Introduction

Imagine running AI models directly on your Android phone, even without a dedicated GPU. Thanks to llama.cpp, a lightweight and efficient inference library (also used by Ollama), this is possible. This tutorial walks you through installing llama.cpp on your Android device using Termux so you can run local language models on the CPU alone. Don’t believe me? Just try it!

Why llama.cpp on Android?

- Privacy: Keep your data local and avoid sending sensitive information to cloud servers.

- Offline Access: Run AI models even without an internet connection.

- Accessibility: Utilize the processing power of your Android device for AI tasks.

- Learning: Explore the inner workings of LLMs.

Prerequisites

- An Android device with enough free storage for the model file, which can exceed 1 GB.

- A stable internet connection for initial setup.

Step 1: Installing Termux

Termux is a terminal emulator for Android that provides a Linux-like environment.

- Download Termux: Install Termux from F-Droid or the Google Play Store. F-Droid is recommended because it gets the latest updates.

- Open Termux: Launch the Termux application.

Step 2: Setting up the Environment

Update Packages:

apt update

apt upgrade -y

Install Essential Tools:

apt install git cmake ccache
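Before moving on, it can help to confirm that the tools are actually on your PATH. The following is a minimal sketch; `missing_tools` is a hypothetical helper name, not part of Termux or llama.cpp, and `make` is included in the usage line because the build step later calls it.

```shell
# Hypothetical helper: print the name of every listed tool
# that cannot be found on PATH.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || echo "$tool"
    done
}

# Usage in Termux after the install step:
#   missing_tools git cmake make ccache
# No output means every tool was found.
```

If a name is printed, installing that package with apt should fix it.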

Step 3: Cloning and Building llama.cpp

Clone the Repository:

git clone https://github.com/ggerganov/llama.cpp.git

cd llama.cpp

Update (Optional): If you’ve previously cloned the repository, you can update it with:

git pull origin master

Build llama.cpp:

cmake .

make

This might take a bit of time…
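If the build feels slow, running make with several parallel jobs may help. The sketch below is one way to choose the job count. `build_jobs` is a hypothetical helper, and the "cores minus one" rule is an assumption meant to keep the phone responsive, not a llama.cpp recommendation.

```shell
# Hypothetical helper: given the number of CPU cores, print a job
# count for make that leaves one core free (assumed rule of thumb).
build_jobs() {
    cores=$1
    if [ "$cores" -gt 1 ]; then
        echo $((cores - 1))
    else
        echo 1
    fi
}

# Usage, from inside the llama.cpp directory:
#   cmake .
#   make -j"$(build_jobs "$(nproc)")"
```

Parallel compilation also uses more memory, so on a device with little RAM a plain make may still be the safer choice.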

Step 4: Downloading an AI Model

You’ll need a model in GGUF format. GGUF is the model file format used by llama.cpp. It usually stores quantized weights, which is what makes inference on a CPU practical.

- Choose a Model: Visit Hugging Face (e.g., unsloth/DeepSeek-R1-Distill-Qwen-1.5B-GGUF) and select a suitable model.

- Download the GGUF file: Download a Q4 or higher quantization file, for example DeepSeek-R1-Distill-Qwen-1.5B-Q4_K_M.gguf

- Storage Access: To easily access downloaded files, grant Termux storage permissions: termux-setup-storage

- This will create a ~/storage/shared directory that links to your device’s shared storage.

- Move the Model: Move the downloaded model to a convenient location, such as ~/storage/shared/Download/AI/

- Rename the file if needed to have something shorter like: deepseek-r1q4.gguf

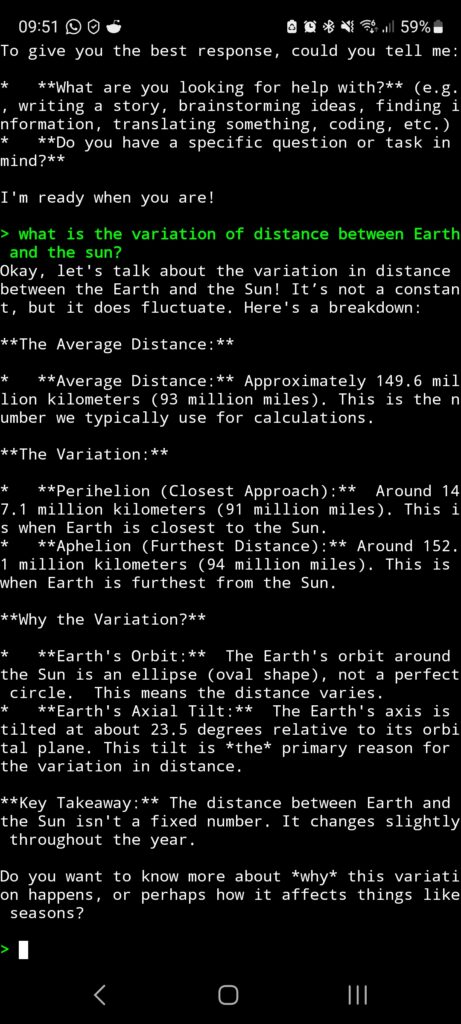
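A quick sanity check after moving the file: GGUF files begin with the four ASCII bytes "GGUF", so an interrupted download or an HTML error page saved under a .gguf name can be caught before you try to load it. This is a minimal sketch; `is_gguf` is a hypothetical helper name, and the check looks only at the header, not at whether the rest of the file is intact.

```shell
# Hypothetical helper: succeed if the file starts with the
# 4-byte ASCII magic "GGUF", fail otherwise (or if it is missing).
is_gguf() {
    [ "$(head -c 4 "$1" 2>/dev/null)" = "GGUF" ]
}

# Usage, with the file name from this tutorial:
#   is_gguf ~/storage/shared/Download/AI/deepseek-r1q4.gguf && echo "looks like GGUF"
```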

Step 5: Running the Model

Basic Usage:

./bin/llama-cli -m ~/storage/shared/Download/AI/deepseek-r1q4.gguf -p "you are a helpful assistant" -b 7 -i --color

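If you run the model often, you could wrap the command in a small shell function that checks the paths first. The sketch below reuses the options from the command above. `run_model` and the `LLAMA_CLI` variable are hypothetical names introduced here for illustration, and the default batch size of 7 simply mirrors this tutorial.

```shell
# Hypothetical wrapper around the command from this step.
# $1 = path to the GGUF model, $2 = batch size (defaults to 7).
# LLAMA_CLI may point to the binary; it defaults to ./bin/llama-cli.
run_model() {
    model=$1
    batch=${2:-7}
    cli=${LLAMA_CLI:-./bin/llama-cli}

    if [ ! -f "$model" ]; then
        echo "model not found: $model" >&2
        return 1
    fi
    if [ ! -x "$cli" ]; then
        echo "llama-cli not found at $cli (run this from the llama.cpp directory)" >&2
        return 2
    fi
    "$cli" -m "$model" -p "you are a helpful assistant" -b "$batch" -i --color
}

# Usage, from inside the llama.cpp directory:
#   run_model ~/storage/shared/Download/AI/deepseek-r1q4.gguf
```

Checking the model path first gives a clearer message than the loader error you would otherwise get for a mistyped file name.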

Tips and Troubleshooting

- Model Compatibility: Ensure the model is in GGUF format.

- Memory Usage: Larger models require more RAM. If the program crashes or Android kills Termux, try a smaller model or lower the batch size (the -b option).

- Updates: Regularly update llama.cpp with ‘git pull origin master’ to benefit from improvements and bug fixes.

- Performance: Performance will vary depending on your device’s CPU.

- Quantization: Q4 quantization offers a good balance between speed, memory use, and accuracy.

- Accuracy: Smaller models are generally less accurate, although recent small models keep getting better.
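For the memory tip above, a rough pre-flight check can compare the model file size with the memory the kernel reports as available. This is only a sketch: `fits_in_ram` is a hypothetical helper, and the 20% headroom for context and runtime buffers is an assumption, not a figure from llama.cpp.

```shell
# Hypothetical helper: succeed if a model of $1 bytes is likely to fit
# in $2 kB of available memory, with an assumed 20% headroom.
fits_in_ram() {
    model_kb=$(( $1 / 1024 ))
    avail_kb=$2
    needed_kb=$(( model_kb + model_kb / 5 ))
    [ "$needed_kb" -le "$avail_kb" ]
}

# Usage in Termux (MemAvailable in /proc/meminfo is reported in kB):
#   model=~/storage/shared/Download/AI/deepseek-r1q4.gguf
#   avail=$(awk '/^MemAvailable:/ {print $2}' /proc/meminfo)
#   fits_in_ram "$(wc -c < "$model")" "$avail" || echo "try a smaller model"
```

Treat the result as a hint only, since actual memory use also depends on context length and other runtime settings.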

Conclusion

You’ve successfully installed and run llama.cpp on your Android device! Experiment with different models and prompts to explore the capabilities of local AI. This opens up a world of possibilities for offline AI applications and learning.

Further Exploration

- Explore different GGUF models on Hugging Face (at the time of writing this post, I would recommend gemma-3-1b-it-Q4_K_M.gguf).

- Learn more about llama.cpp’s command-line options.

- Integrate llama.cpp into your Android apps.